Latest Features of Humanoid Robots

A robot is a programmable machine equipped with sensors, actuators, and a control system that enables it to interact with its environment, perform tasks, or carry out specific functions. The design of robots varies widely, from industrial arms on assembly lines to autonomous drones, from robotic vacuum cleaners to humanoid companions with advanced artificial intelligence.

Artificial Intelligence

Artificial Intelligence (AI) stands at the forefront of technological innovation, transforming the way we live, work, and interact with the world. At its core, AI refers to the development of computer systems that can perform tasks requiring human-like intelligence. This encompasses a spectrum of capabilities, from basic rule-based systems to advanced machine learning algorithms that mimic cognitive functions.

Foundations of AI:

AI draws inspiration from human intelligence, aiming to replicate aspects of learning, reasoning, problem-solving, perception, and language understanding in machines. The field is interdisciplinary, blending computer science, mathematics, neuroscience, and engineering to create intelligent systems.

Types of AI:

AI is often categorized based on its capabilities and functions:

Narrow AI (Weak AI):

Narrow AI is designed to perform a specific task or a set of closely related tasks. Examples include virtual assistants, image recognition systems, and recommendation algorithms.

General AI (Strong AI):

General AI represents a level of artificial intelligence that can understand, learn, and apply knowledge across diverse domains, akin to human intelligence. Achieving true general AI remains an aspirational goal.

Machine Learning:

A significant driver of AI advancements is machine learning, a subset of AI that focuses on creating algorithms capable of learning from data. The three main types of machine learning are:

Supervised Learning:

In supervised learning, the algorithm is trained on a labeled dataset in which each input is paired with its correct output. The model learns to map inputs to outputs and can then make predictions on new, unseen data.
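As a minimal sketch of this train-then-predict pattern, the Python below "learns" a straight line from labeled input/output pairs using ordinary least squares, then predicts the output for an input it has never seen. The tiny dataset (which follows y = 2x + 1) and the helper names `fit_line` and `predict` are hypothetical; real robots use far larger models, but the workflow is the same.

```python
def fit_line(xs, ys):
    """Return (w, b) minimizing the squared error of y ≈ w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form least-squares slope: covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

def predict(model, x):
    """Apply the learned line to a new input."""
    w, b = model
    return w * x + b

# Hypothetical labeled training data: every input comes with its known output.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [1.0, 3.0, 5.0, 7.0, 9.0]

model = fit_line(train_x, train_y)
print(model)               # (2.0, 1.0)
print(predict(model, 10))  # 21.0, for an input outside the training set
```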

Unsupervised Learning:

Unsupervised learning involves training algorithms on unlabeled data, allowing the system to find patterns and relationships independently. Common techniques include clustering and dimensionality reduction.
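Clustering can be illustrated with a minimal k-means sketch in Python: the points carry no labels, and the algorithm groups them by repeatedly assigning each point to its nearest centroid and then moving each centroid to the mean of its points. The six points and the hand-picked starting centroids are assumptions for illustration; practical implementations usually initialize centroids randomly or with k-means++.

```python
import math

def kmeans(points, centroids, max_iter=20):
    """Cluster unlabeled 2-D points with k-means, starting from the given centroids."""
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
                  for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for i, c in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(c)  # an empty cluster keeps its old centroid
        if new_centroids == centroids:
            break  # centroids stopped moving: converged
        centroids = new_centroids
    return centroids, labels

# Hypothetical unlabeled data: two loose groups of points.
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids, labels = kmeans(points, [(0, 0), (10, 10)])
print(labels)     # [0, 0, 0, 1, 1, 1]
print(centroids)
```

The algorithm never sees which group a point "should" belong to; the grouping emerges from distances alone.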

Reinforcement Learning:

Reinforcement learning involves an agent learning to make decisions by receiving feedback in the form of rewards or penalties. The agent explores different actions to maximize cumulative rewards over time.
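This reward-driven loop can be sketched with tabular Q-learning, one common reinforcement learning algorithm (chosen here for illustration; the text does not name a specific method). The environment is a hypothetical five-cell corridor in which the agent earns a reward of 1 only on reaching the rightmost cell. The learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are assumed values.

```python
import random

N_STATES = 5        # cells 0..4 of a hypothetical corridor; cell 4 is the goal
ACTIONS = (-1, 1)   # -1 = move left, 1 = move right

def step(state, action):
    """Deterministic toy environment: reward 1.0 only on reaching the goal cell."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: try a random action
            else:
                # Exploit: pick the highest-valued action, breaking ties at random.
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward the reward plus the discounted best value of s'.
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

q = q_learning()
# Greedy policy for each non-goal cell after training.
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]
```

After training, the greedy action in every non-goal cell is "move right", the shortest route to the reward, even though the agent was never told where the goal was.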

Natural Language Processing (NLP):

NLP is a crucial aspect of human-robot interaction, enabling robots to understand, interpret, and generate human language. Here are some of the latest features and advancements in NLP for humanoid robots:

Conversational Understanding:

Humanoid robots are becoming more adept at understanding natural, contextually rich conversations. Advanced NLP models allow robots to comprehend nuances, context shifts, and multi-turn dialogues.

Context Awareness:

Improved context awareness enables robots to better understand and respond to user queries based on the ongoing conversation. This involves considering the conversation's history and retaining relevant information.
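One simple way to retain relevant information is to keep a bounded window of recent turns plus a dictionary of facts ("slots") the user has already supplied, so that a follow-up like "Is the light on there?" can reuse an earlier location. The minimal sketch below assumes an upstream language-understanding step has already extracted slots such as `room`; the `DialogueContext` class and the slot names are hypothetical.

```python
from collections import deque

class DialogueContext:
    """Keeps the last few turns plus any facts (slots) stated so far."""

    def __init__(self, max_turns=5):
        self.history = deque(maxlen=max_turns)  # oldest turns drop off automatically
        self.slots = {}                          # e.g. {"room": "kitchen"}

    def add_turn(self, speaker, text, **slots):
        """Record a turn and any slot values extracted from it."""
        self.history.append((speaker, text))
        self.slots.update(slots)  # newer values overwrite older ones

    def resolve(self, slot, default=None):
        """Fill a detail the current utterance omits by reusing an earlier one."""
        return self.slots.get(slot, default)

ctx = DialogueContext(max_turns=3)
ctx.add_turn("user", "Go to the kitchen.", room="kitchen")
ctx.add_turn("robot", "Heading to the kitchen.")
ctx.add_turn("user", "Is the light on there?")  # "there" points back to an earlier turn
print(ctx.resolve("room"))  # kitchen
```

Bounding the history keeps memory use fixed, while the slot dictionary preserves facts that matter even after the turns that stated them have scrolled out of the window.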

Multilingual Capabilities:

Some humanoid robots are equipped with advanced NLP models that support multiple languages. This enables them to interact with users from diverse linguistic backgrounds, contributing to inclusivity and accessibility.

Sentiment Analysis:

Sentiment analysis capabilities allow robots to discern the emotional tone of user input. This feature enables them to respond appropriately to positive, negative, or neutral sentiments, enhancing the quality of interactions.
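Modern robots typically rely on trained classifiers, but a lexicon-based scorer is a compact way to show the basic idea of mapping text to a positive, negative, or neutral tone. In the Python sketch below, the word list and its scores are hypothetical, and the negation rule (flipping the score of a word immediately after "not", "never", or "no") is a deliberately crude assumption.

```python
import re

# Hypothetical toy lexicon: word -> sentiment score.
LEXICON = {"great": 2, "good": 1, "love": 2, "thanks": 1,
           "bad": -1, "terrible": -2, "hate": -2, "broken": -1}
NEGATORS = {"not", "never", "no"}

def sentiment(text):
    """Label text positive/negative/neutral from summed word scores."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        value = LEXICON.get(word, 0)
        # Flip the polarity of a word directly preceded by a negator ("not good").
        if i > 0 and words[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I love this robot, thanks!"))                      # positive
print(sentiment("The arm is broken and the service was not good"))  # negative
print(sentiment("Please open the door."))                           # neutral
```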

Ambiguity Resolution:

Advanced NLP algorithms help robots resolve ambiguous queries by drawing on the conversational context, the user's history, and other available cues. This helps them provide more accurate and relevant responses.

Speech Recognition Improvements:

Enhanced speech recognition technology contributes to improved NLP in robots. Accurate transcription of spoken words is crucial for understanding user inputs and generating appropriate responses.

Natural Language Generation (NLG):

NLG capabilities enable humanoid robots to generate human-like responses in natural language. This goes beyond simple predefined phrases, allowing for dynamic and context-aware generation of responses.

Personalization and User Profiling:

Some humanoid robots leverage NLP to create user profiles and personalize interactions. Understanding user preferences, speech patterns, and historical interactions contributes to a more tailored and engaging experience.

Enhanced Chatbot Functionality:

Humanoid robots with integrated chatbot functionality benefit from advanced NLP models. These chatbots can engage in meaningful conversations, answer queries, and provide assistance in various domains.

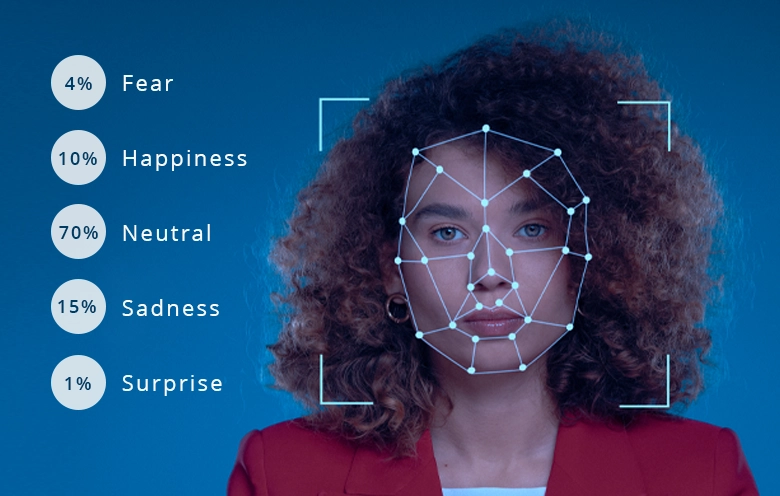

Emotion Recognition

Emotion recognition is a branch of AI that focuses on deciphering and understanding human emotions through various technological means. The goal is to develop systems capable of identifying, interpreting, and responding to the emotional states of individuals, often in real time. This field has gained prominence due to its wide-ranging applications in diverse domains, including human-computer interaction, healthcare, education, and marketing.

Key Components of Emotion Recognition:

Facial Expression Analysis:

Facial expressions are a rich source of emotional cues. Emotion recognition systems use computer vision algorithms to analyze facial features, such as eyebrow movement, eye widening, or lip curvature, to infer emotional states.

Speech Analysis:

Emotion can be conveyed through speech patterns, intonation, and voice quality. Speech analysis algorithms assess pitch, tone, rhythm, and the use of certain words to identify emotional cues in spoken language.
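To make the pitch part concrete, here is a rough Python sketch of autocorrelation-based pitch estimation, one standard technique chosen here for illustration. A voiced sound repeats with a period set by its fundamental frequency, so the signal correlates most strongly with a copy of itself shifted by that period. The 50 to 400 Hz search range and the synthetic 200 Hz test tone are assumptions; real speech frames are noisier and usually need windowing and voicing detection.

```python
import math

def estimate_pitch(samples, sample_rate, fmin=50.0, fmax=400.0):
    """Estimate fundamental frequency (Hz) by picking the autocorrelation peak."""
    min_lag = int(sample_rate / fmax)   # shortest period considered, in samples
    max_lag = int(sample_rate / fmin)   # longest period considered, in samples
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the signal and a copy of itself shifted by `lag` samples.
        corr = sum(samples[n] * samples[n + lag] for n in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag

# Synthetic 200 Hz tone standing in for a short voiced speech frame.
rate = 8000
tone = [math.sin(2 * math.pi * 200 * n / rate) for n in range(800)]
print(estimate_pitch(tone, rate))  # 200.0
```

Tracking how this estimate rises, falls, or varies over successive frames gives the kind of intonation feature an emotion classifier could use.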

Physiological Signals:

Physiological signals, such as heart rate variability, skin conductance, and muscle activity, provide insights into emotional arousal and stress levels. Wearable devices and sensors can capture these signals for emotion recognition.
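As an example of turning a raw physiological signal into a usable feature, the Python below computes RMSSD (the root mean square of successive differences between heartbeat intervals), a common time-domain measure of heart rate variability. The interval values are hypothetical, and how such a feature relates to arousal or stress would be learned or calibrated separately in a real system.

```python
import math

def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between heartbeat intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

# Hypothetical RR intervals (milliseconds between beats), as a wearable might report.
rr = [800, 810, 790, 805, 795]
print(round(rmssd(rr), 2))  # 14.36
```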

Gesture Recognition:

Human gestures, including body language and hand movements, offer valuable information about emotional states. Gesture recognition technologies interpret these movements to infer emotional cues.

Biometric Data Integration:

Emotion recognition systems often integrate multiple modalities, combining data from facial expressions, speech, and physiological signals to enhance accuracy and reliability.
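Modalities can be combined at several levels. The Python below sketches one simple option, weighted late fusion, in which each modality's classifier produces its own emotion probabilities and the system combines them as a weighted average. The emotion labels, the per-modality outputs, and the reliability weights are all hypothetical.

```python
EMOTIONS = ("happy", "neutral", "sad")

def fuse(predictions, weights):
    """Weighted late fusion: weighted average of per-modality probabilities."""
    fused = {e: 0.0 for e in EMOTIONS}
    total_weight = sum(weights[m] for m in predictions)
    for modality, probs in predictions.items():
        for emotion in EMOTIONS:
            fused[emotion] += weights[modality] * probs[emotion] / total_weight
    return fused

# Hypothetical outputs of three independent per-modality classifiers.
predictions = {
    "face":       {"happy": 0.6, "neutral": 0.3, "sad": 0.1},
    "speech":     {"happy": 0.2, "neutral": 0.5, "sad": 0.3},
    "physiology": {"happy": 0.4, "neutral": 0.4, "sad": 0.2},
}
weights = {"face": 0.5, "speech": 0.3, "physiology": 0.2}  # assumed reliabilities

fused = fuse(predictions, weights)
print(max(fused, key=fused.get))  # happy
```

Here the speech channel alone would suggest "neutral", but the combined evidence favors "happy"; dividing by the total weight keeps the fused scores a valid probability distribution even if a modality is missing.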

Human-Robot Interaction (HRI) Design: Crafting Seamless Connections in the Digital Realm

Human-Robot Interaction (HRI) design is a multidisciplinary field that focuses on creating interfaces and systems that facilitate meaningful and effective communication between humans and robots. The goal is to design interactions that are intuitive, engaging, and align with human expectations, fostering positive and collaborative relationships between users and robots. HRI design encompasses a range of elements, including physical design, user experience (UX), cognitive ergonomics, and ethical considerations.

Key Principles of HRI Design:

User-Centered Design:

HRI design places users at the center of the development process. Understanding user needs, preferences, and expectations is fundamental to creating interfaces that resonate with human users.

Intuitiveness:

Interfaces should be intuitive, allowing users to interact with robots in a natural and straightforward manner. This involves minimizing complexity and ensuring that users can easily understand and predict robot behavior.

Adaptability:

HRI systems should be adaptable to different users and contexts. Consideration of diverse user abilities, cultural backgrounds, and environmental conditions is essential for creating inclusive and versatile designs.

Transparency:

Transparency in HRI design involves making the robot's actions and intentions clear to users. Users should have insight into how the robot perceives and processes information, fostering trust and predictability.

Feedback Mechanisms:

Providing feedback to users about the robot's state, actions, and intentions is crucial for effective communication. Visual, auditory, and haptic feedback mechanisms contribute to a richer interaction experience.

Collaboration and Cooperation:

HRI design often targets collaborative scenarios where humans and robots work together. Designing interfaces that promote cooperation, shared understanding, and effective communication enhances overall system performance.

User Empowerment:

Empowering users involves giving them control and agency in the interaction. Designing interfaces that allow users to influence and guide the robot's behavior contributes to a more satisfying and user-friendly experience.

However, as we celebrate these milestones, it is crucial to tread carefully, mindful of the ethical considerations that accompany this technological evolution. Privacy concerns, transparency in decision-making, and the responsible use of artificial intelligence demand our attention. Striking the delicate balance between innovation and ethical stewardship will define the trajectory of human-robot relationships in the years to come.

In conclusion, the latest features of humanoid robots propel us into an era where the collaboration between humans and machines is not just a technological feat but a profound exploration of our shared future. As we stand at the intersection of innovation and responsibility, the evolution of humanoid robots invites us to reimagine the possibilities of human-machine coexistence, fostering a harmonious synergy between the artificial and the human.
